Data Decomposition Parallel computing works by using a shared data object with multiple “processors” working on it.

the toy problem

To learn more about Data Decomposition type parallel computing I created a toy application. This entailed creating a demo application that utilized a basic stopwatch instrument, a serial computing class and a parallel computing class. The application will perform row or column based operations on an 8K by 8K array.

Since I needed to time runs I created a basic stopwatch class. This class provides for starting the stop watch, checking elapsed time and stopping it.

the serial code

I created a serial computing class that initializes an 8K by 8K array of integers and has methods for computing across rows and computing down columns. I created these two methods so that I could see potential differences in working with the array via row or column.

The serial class is used as the baseline which the parallel class is compared against.

I wanted to make sure that the results from the serial and parallel calculations were the same. Therefore, I added methods to the serial class that can be used to check that the parallel results match.

the parallel code

Because the implementation language was Java, the parallel code class extends the Thread class. The Thread class works by using a run() method that takes no arguments. To cope with this, I use a private constructor to determine which method to run. The parallel methods create a new instance which takes arguments for if the row or column method should be called and the start and end value of the domain. These arguments set internal variables which are then used within the run() method.

Psuedo-code for the parallel computation:

computeRowsDomainLimited(int startRow, int endRow)

{

Partsize = size / # threads

Remainder = size % # threads

For o = 0 to < # threads

{

Start = o * size

End = start + partSize

computeRowsDomain(start,end)

}

computeRowsDomain(end, end+remainder)

Set up threads with the above

Then run them

Then join/wait for all to finish

}

Results

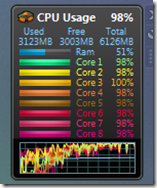

The development machine was a powered by an Intel I7-720 with 6Gig of RAM. The baseline serial methods and the parallel methods of varying partition/thread size were each run 10 times. The best run time is used in the calculations.

The code seems to scale well and perform well for the row based computation up to 8 processing threads. Then it seems to drop off a bit and then gradually taper off in performance. I think this corresponds to the machine having 8 logical cores. After 8 the overhead of the additional threads is greater than any potential benefit.

However, the performance the columns seems to peak out around 4 or 5 threads. This could be because of the CPU having 4 real cores with Hyper-Threading. Or it may be that the overhead of accessing non-continuous memory is the determining factor.

Source Code

Source code for the demo application that utilizes the stopwatch class and both the serial and parallel computing classes can be found here:

http://www.dbBear.com/DataDecomposition.zip

In the future I will implement the same computations using OpenCL.

No comments:

Post a Comment